I work as a Systems Analyst at the International Livestock Research Institute (ILRI) in Nairobi, Kenya. In the name of alleviating poverty in the developing world, our scientists generate a lot of data about diseases, weather, markets, etc. Lots of data means lots of servers, routers, switches, etc., and because most science types aren’t tech-savvy, I get paid to help them figure out what to buy, where to put it, and how to use it!

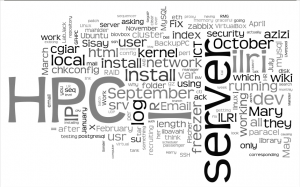

Because I’m super organized and love documentation, I keep a log of what I do from day to day: who I help, how I fixed a bug, what I installed, etc. I’ve been doing it for about a year and a half now, so I thought it’d be interesting to see what the log would look like as a word cloud built from its word frequencies.

Waaa! I’m jealous!

Quit whining; it’s easy. First, I fed my big “diary” of activities into AntConc to generate the raw numbers:

```shell
$ head -n 5 diary_wordlist.txt
10 164 HPC
11 155 I
12 155 org
17 109 server
27 81 ilri
```

… then massaged them a bit to get a simple word:frequency format:

```shell
$ awk '{print $3":"$2}' diary_wordlist.txt > diary_wordlist_frequencies.txt
```

Right now it’s technically ready to go, but there are a lot of commonly occurring junk words, such as “to” and “from”, which should be cleaned out. Otherwise your word cloud will be extremely underwhelming.
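If AntConc isn't handy, you can do a rough stand-in for the counting step in plain shell. This is a sketch under my own assumptions, not a description of how AntConc works internally. `word_frequencies` is a hypothetical function name. It treats any run of letters and digits as a word, keeps case as-is, and prints rank, frequency and word columns, so the awk one-liner above still works on its output.

```shell
#!/bin/bash
# word_frequencies (hypothetical helper): reads plain text on stdin and prints
# "rank frequency word" lines, most frequent word first.
word_frequencies() {
  tr -cs '[:alnum:]' '\n' |     # turn every run of non-alphanumerics into one newline
    grep -v '^$' |              # drop the empty line produced by leading punctuation
    sort |                      # group identical words together
    uniq -c |                   # count each group: "   count word"
    sort -k1,1nr -k2,2 |        # highest count first, ties broken alphabetically
    awk '{ print NR, $1, $2 }'  # prepend a 1-based rank
}
```

Something like `word_frequencies < diary.txt > diary_wordlist.txt` would produce input for the awk step, with `diary.txt` standing in for your own log file. If you'd rather count "The" and "the" as the same word, add `tr '[:upper:]' '[:lower:]' |` at the start of the pipeline.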

Clean up the junk words

I wrote a script, strip_keywords.sh, which reads lines from one or more reference files (such as C keywords, common English words, etc.) and strips them from my word list:

```shell
#!/bin/bash

# a space-separated list of files containing keywords
# we want to strip from the results
keywords_files="english.txt"

for keywords in $keywords_files
do
    while read -r word
    do
        # delete lines whose word (the part before the colon) is a keyword;
        # the file is in word:frequency format, e.g. "server:109"
        sed -i "/^${word}:/d" diary_wordlist_frequencies.txt
    done < "$keywords"
done
```

All good.
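The loop above calls `sed -i` once per keyword, so it rewrites the whole file for every word in english.txt. That gets slow with a long keyword list. One possible alternative is a single awk pass that loads the keywords into a lookup table and filters the word list in one go. This is a different technique from the sed loop, sketched here under my own assumptions. `strip_keywords_awk` is a hypothetical name. Unlike the sed version, it compares words case-insensitively and writes to stdout instead of editing the file in place.

```shell
#!/bin/bash
# strip_keywords_awk (hypothetical helper): single-pass alternative to the sed loop.
# Usage: strip_keywords_awk KEYWORDS_FILE WORDLIST_FILE
#   KEYWORDS_FILE: one keyword per line
#   WORDLIST_FILE: word:frequency lines
# Prints only the word:frequency lines whose word is not a keyword.
strip_keywords_awk() {
  awk -F: '
    # While reading the keywords file, remember each keyword in lower case
    # (a trailing carriage return from Windows line endings is dropped first).
    FILENAME == ARGV[1] { sub(/\r$/, ""); stop[tolower($0)] = 1; next }
    # While reading the word list, print the line unless its word is a keyword.
    !(tolower($1) in stop)
  ' "$1" "$2"
}
```

For example, `strip_keywords_awk english.txt diary_wordlist_frequencies.txt > diary_wordlist_clean.txt` leaves the original file untouched (`diary_wordlist_clean.txt` is a made-up name). If you have several keyword files, concatenate them into one first.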

Now paste that bad boy into Wordle and you'll be good to go.
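Before pasting, a quick check that every line really is in word:frequency form can save you a confusing cloud. This is a hypothetical helper of my own, not part of Wordle. It assumes words contain no colons or spaces and frequencies are plain integers.

```shell
#!/bin/bash
# check_wordlist (hypothetical helper): prints every line of the given file that
# is NOT "word:frequency" (a word, a colon, then digits).
# Returns 0 if all lines are well-formed, 1 if any are not, 2 if grep fails.
check_wordlist() {
  grep -vE '^[^:[:space:]]+:[0-9]+$' "$1"
  case $? in
    0) echo "malformed lines found in $1" >&2; return 1 ;;  # grep printed bad lines
    1) return 0 ;;                                          # no bad lines at all
    *) return 2 ;;                                          # e.g. the file is missing
  esac
}
```

Running `check_wordlist diary_wordlist_frequencies.txt` prints nothing and succeeds when the file is ready to paste.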