I recently rolled out a new distributed model for our research computing cluster at work. We’re using GlusterFS for networked home directories and SLURM for job/resource scheduling. GlusterFS allows us to scale storage with minimal downtime or service disruption, and SLURM allows us to treat compute nodes as generic resources for running users’ jobs (i.e., users’ homes are “everywhere”, so it doesn’t matter where the jobs run).


Build it and they will come

When I first started playing with SLURM a few years ago, I was confused by the job scheduling terminology (queues, partitions, allocations, etc.) as well as the commands for using the scheduler (salloc, srun, squeue, sbatch, scontrol, etc.). If I, the systems administrator, was confused, what about the users?!

For the scheduler to work properly we all have to use it; it’s opt-in, and it doesn’t work unless everyone opts in! How can we allocate shared resources efficiently and fairly among dozens of users unless we are doing proper accounting?

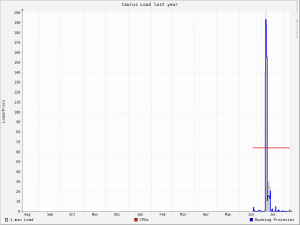

In July 2013, one of our users got the parameters wrong in her batch job. The result was that the server was running at three times its capacity: 200 CPUs’ worth of load on a system with only 64 CPUs!

I decided that in order to get people to use the scheduler I had to make it easy for them.

“interactive” script

SLURM is good at launching thousands of batch jobs on thousands of compute nodes, but a lot of users just want to run something “right now” as they sit at the computer; usually something interactive, like BLAST, GCC, R, clustalw2 etc.

It’s really simple. You just type interactive:

[aorth@hpc: ~]$ interactive

salloc: Granted job allocation 3898

[aorth@taurus: ~]$

With one simple command I’ve asked the job scheduler to give me an allocation, and I’ve been seamlessly transferred from hpc (the head node) to a new shell on taurus (one of our compute nodes). You can see my allocation, along with those of several other users:

[aorth@taurus: ~]$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

3898 batch interact aorth R 0:03 1 taurus

3857 highmem fastqc.s emasumba R 12:57:33 1 mammoth

3893 highmem bowtie.s ndegwa R 5:05:11 1 mammoth

By default it only allocates one CPU in the batch partition. You can override the defaults using the options specified in the help text:

[aorth@hpc: ~]$ interactive -h

Usage: interactive [-c] [-p]

Optional arguments:

-c: number of CPU cores to request (default: 1)

-p: partition to run job in (default: batch)

-J: job name

Written by: Alan Orth <a.orth@cgiar.org>

It also does other neat things like forwarding $DISPLAY if your session is using X11Forwarding.
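A wrapper like this can be very small. The sketch below is not the real script, just a minimal illustration under a few assumptions: the function name build_interactive_cmd and the DEF_* variables are made up for this example, the defaults are copied from the help text above, and X11 handling is left out. It only prints the salloc command it would run, so you can try it on a machine without SLURM.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of an "interactive"-style wrapper (not the actual script).

# Defaults taken from the help text; the variable names are assumptions.
DEF_CPUS=1
DEF_PARTITION=batch
DEF_JOBNAME=interactive

# Parse -c/-p/-J and print the salloc command line that would be run.
build_interactive_cmd() {
    local cpus=$DEF_CPUS partition=$DEF_PARTITION jobname=$DEF_JOBNAME opt
    local OPTIND=1
    while getopts "c:p:J:" opt; do
        case $opt in
            c) cpus=$OPTARG ;;
            p) partition=$OPTARG ;;
            J) jobname=$OPTARG ;;
            *) return 1 ;;   # unknown option or missing argument
        esac
    done
    # salloc requests the allocation; srun --pty starts a login shell inside it.
    echo "salloc -c $cpus -p $partition -J $jobname srun --pty bash -l"
}
```

Calling build_interactive_cmd -c 4 -p highmem prints a command requesting four cores in the highmem partition. To turn the sketch into a working wrapper you would run the printed command instead of echoing it, on a host where salloc and srun are available.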

The code is just a few dozen lines of bash, and it's open source (GPLv3). You can find it on my GitHub account here: https://github.com/alanorth/hpc_infrastructure_scripts/blob/master/slurm/interactive

Genius!